The Biggest Revenue Lever Most Teams Ignore

1. RevOps is the glue that can take you from “little M” to “big M” marketing

RevOps Champions podcast, Beyond Random Acts: Building a Scalable Marketing Operating System (Jan 29, 2026)

TLDR:

Every growing company accumulates "random acts of marketing" (disconnected campaigns that worked early but won't scale)

Moving from "little M" marketing (just sales support) to "big M" marketing (full customer lifecycle) requires RevOps infrastructure

Building marketing maturity takes time: expect 12+ months to go from fragmented data to clear ROI visibility

Jennifer Zick founded Authentic in 2017 to solve a problem she'd lived: growing companies eventually look behind them and see a trail of disconnected marketing experiments. What got them here won't get them where they want to go.

The pattern every growth company follows

Businesses start founder-led and sales-driven. They experiment to find product-market fit, which creates scattered campaigns, inconsistent messaging, and no unified measurement. At some inflection point – new PE investment, generational handoff, or hitting a growth wall – they realize they need strategic go-to-market thinking.

"That's not a critique, that's just a reality for growing businesses," Jennifer explained. But breaking through requires moving from what she calls "little M" marketing to "big M" marketing.

Little M versus big M marketing

Little M is marketing as sales support: lead gen, demand gen, and brand awareness, all in service of "give us more at-bats." It usually lives awkwardly in the org chart, tucked under sales or handed to agencies without real ownership.

Big M is marketing supporting the entire customer lifecycle, including onboarding, retention, and expansion. Jennifer uses the visual of a "flipped funnel" or bow-tie: marketing's job extends far past the initial sale into ensuring customers succeed and renew. That's where healthy revenue comes from.

Why RevOps is the centerpiece

"RevOps is the glue," Jennifer said. "It's the heart of what makes the strategy possible."

At her own company, a marketing manager serves as the RevOps specialist, sitting at the center of everything the team executes and ensuring data integrity, proper segmentation, and correct automation sequencing. Without that operational backbone, you can't see results clearly, which means you can't improve.

She emphasized that nothing should be automated to the point where it feels inauthentic. That balance between efficiency and humanity requires the chemistry between CMO vision and RevOps orchestration.

Marketing maturity takes longer than expected

Jennifer's team uses a 20-attribute assessment to score marketing maturity, with scores ranging from 20 to 100 points. Clients typically start around 36 and reach 68 after a full year of work. No one has hit 100 because there's always room to improve.
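To make the scoring arithmetic concrete, here is a minimal sketch. It assumes each of the 20 attributes is rated from 1 to 5, which is only inferred from the 20-to-100 range; the source does not describe the actual rubric, and the function names are hypothetical.

```python
ATTRIBUTE_COUNT = 20            # from the text: a 20-attribute assessment
MIN_RATING, MAX_RATING = 1, 5   # assumption inferred from the 20-to-100 range


def maturity_score(ratings: list[int]) -> int:
    """Sum per-attribute ratings into a total maturity score."""
    if len(ratings) != ATTRIBUTE_COUNT:
        raise ValueError(f"expected {ATTRIBUTE_COUNT} ratings, got {len(ratings)}")
    if any(not MIN_RATING <= r <= MAX_RATING for r in ratings):
        raise ValueError("each rating must be between 1 and 5")
    return sum(ratings)


def average_rating(score: int) -> float:
    """Translate a total score back into an average per-attribute rating."""
    return score / ATTRIBUTE_COUNT


# Hypothetical ratings that add up to the typical starting score of 36.
starting = [2] * 16 + [1] * 4
print(maturity_score(starting))  # 36
print(average_rating(36))        # 1.8
print(average_rating(68))        # 3.4
```

Under that assumption, the typical one-year journey moves the average attribute from just under 2 out of 5 to roughly 3.4 out of 5.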

"You don't just get from little M marketing to clear marketing ROI by flipping a switch," she said. Clients are always missing pieces of foundation, process, and data. Building those takes 12+ months of consistent effort.

The key metrics to track as you mature: LTV and CAC (the "big two"), NPS for customer advocacy, funnel conversion rates, time to close, and net revenue retention for subscription businesses.
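For readers who want the arithmetic behind the "big two" and net revenue retention, the sketch below uses common simplified definitions. These formulas are not taken from the podcast, companies define the metrics differently, and every number is made up for illustration.

```python
def cac(sales_and_marketing_spend: float, new_customers: int) -> float:
    """Customer acquisition cost: spend divided by customers won in the period."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return sales_and_marketing_spend / new_customers


def ltv(monthly_revenue_per_account: float, gross_margin: float,
        monthly_churn_rate: float) -> float:
    """Simplified lifetime value: margin-adjusted monthly revenue over churn."""
    if monthly_churn_rate <= 0:
        raise ValueError("monthly_churn_rate must be positive")
    return monthly_revenue_per_account * gross_margin / monthly_churn_rate


def net_revenue_retention(starting_mrr: float, expansion: float,
                          contraction: float, churned: float) -> float:
    """NRR for one cohort: recurring revenue kept and grown, excluding new logos."""
    if starting_mrr <= 0:
        raise ValueError("starting_mrr must be positive")
    return (starting_mrr + expansion - contraction - churned) / starting_mrr


# Illustrative numbers only.
acquisition_cost = cac(120_000, 40)
lifetime_value = ltv(500, 0.8, 0.02)
nrr = net_revenue_retention(100_000, 12_000, 3_000, 5_000)

print(f"CAC: ${acquisition_cost:,.0f}")                     # CAC: $3,000
print(f"LTV: ${lifetime_value:,.0f}")                       # LTV: $20,000
print(f"LTV:CAC = {lifetime_value / acquisition_cost:.1f}")  # LTV:CAC = 6.7
print(f"NRR: {nrr:.0%}")                                     # NRR: 104%
```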

The bottom line

If your marketing feels like random acts, that's normal, but not sustainable. Invest in RevOps infrastructure to connect your campaigns into a coherent system. Expect the maturity journey to take a year or more, and measure progress through both efficiency metrics and customer lifecycle health. The fundamentals haven't changed: know who you want to matter to, why you should matter, and how to build trust. You just can't answer those questions without strong ops underneath.

2. If your cold email fails, it’s most likely the system, not the copy

Growth Band webinar: Outbound Reinvented: Scaling Cold Email Without Burning Domains (Jan 29, 2026)

TLDR:

Separate your sending infrastructure by email provider – Google domains for Gmail recipients, Azure for Microsoft – to dramatically improve deliverability

Test hypotheses in batches of 100-500 contacts over six days, then use a decision tree to scale, pivot, or kill campaigns

Your goal isn't to generate leads; it's to build a repeatable system that shows exactly what works

Most B2B teams blame poor outbound results on bad copy or tough markets. But the real problem runs deeper: the entire playbook is outdated. According to Ilya Azovtsev from Growth Band, who manages over 15,000 mailboxes for clients, teams that adopt a systematic approach are seeing reply rates that others think are impossible, including 30% reply rates where 90% of recipients were on Microsoft.

Build infrastructure before writing a single email

The foundation isn't your message. It's where your message comes from. The framework separates your sending infrastructure completely:

Premium Google Workspace mailboxes with DNS shields on Cloudflare (one domain, three mailboxes, 15 emails per day) for Gmail recipients

Azure SMTP infrastructure (one domain, 10 mailboxes, five emails per day) for Microsoft recipients

Why the split? Mailbox providers tend to trust messages that originate in their own ecosystem. When you send from Google to Google, or Microsoft to Microsoft, your deliverability jumps significantly.

Most teams blast from a single infrastructure and wonder why Microsoft's spam filters are "brutal." The secret is they're only brutal if you're coming from the wrong place.

Use hypothesis testing instead of hoping for results

Stop running campaigns and hoping for results. Instead, treat outbound like a product experiment. Start with 100-500 contacts per hypothesis (not thousands).

After six days, apply a simple decision tree:

High reply rate but low interest means change your copy (the audience is responsive but your value proposition missed).

Low reply rate but high interest means fix your deliverability (you cracked the code, but messages aren't landing).

Low on both? Kill it immediately.

One campaign example: 5.2% reply rate but zero interested replies. That tells you exactly what went wrong: the audience responded, but the pain-to-value match was off.

Another campaign with the same audience but different messaging saw nearly 100% of its replies come back interested. Same people, different system.
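The decision tree above can be sketched in a few lines. The thresholds here (3% reply rate, 30% of replies interested) are placeholder assumptions, since the webinar summary gives no cutoffs, and the "scale" branch for campaigns that are high on both is inferred from the TLDR rather than stated in the tree.

```python
from dataclasses import dataclass


@dataclass
class CampaignResult:
    sent: int
    replies: int
    interested: int  # replies that expressed interest

    @property
    def reply_rate(self) -> float:
        return self.replies / self.sent if self.sent else 0.0

    @property
    def interest_rate(self) -> float:
        return self.interested / self.replies if self.replies else 0.0


def decide(result: CampaignResult,
           min_reply_rate: float = 0.03,      # assumed threshold
           min_interest_rate: float = 0.30    # assumed threshold
           ) -> str:
    """Apply the six-day decision tree to one hypothesis."""
    high_reply = result.reply_rate >= min_reply_rate
    high_interest = result.interest_rate >= min_interest_rate
    if high_reply and high_interest:
        return "scale"               # inferred branch
    if high_reply:
        return "change_copy"         # responsive audience, value prop missed
    if high_interest:
        return "fix_deliverability"  # message works, but isn't landing
    return "kill"


# The 5.2% reply rate, zero-interest example from the text (500 contacts assumed).
print(decide(CampaignResult(sent=500, replies=26, interested=0)))  # change_copy
print(decide(CampaignResult(sent=500, replies=5, interested=4)))   # fix_deliverability
```

Keeping the thresholds as parameters makes it easy to tune them per market once you have a few batches of your own data.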

Feed AI your CRM data for smarter hypotheses

The strongest campaign ideas come from two places: actual client conversations (record calls and extract the exact language prospects use to describe their problems) and AI trained on your specific data.

Build a context repository with your CRM data, case studies, and client interviews. When you ask AI for campaign ideas from this enriched context, you get specific, testable hypotheses, not generic "reach out to startup founders" suggestions.

The bottom line

Outbound isn't broken; your system is. Build separate sending infrastructure for each email provider, test hypotheses systematically in small batches, and let data tell you whether to scale or pivot.

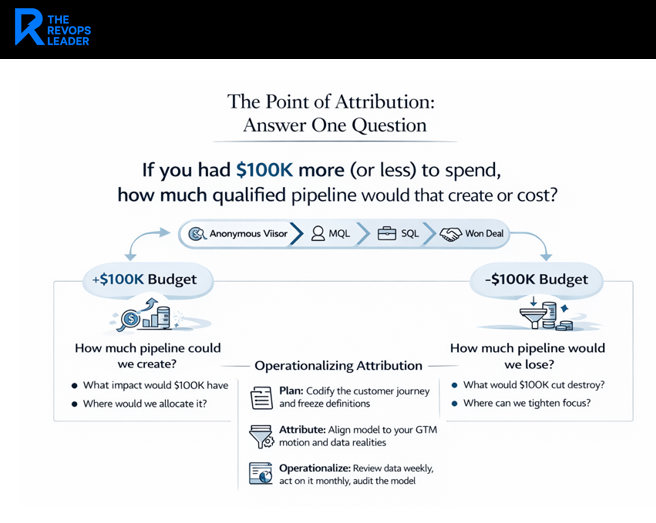

3. Attribution should answer one question: if you had $100K more (or less) to spend, how much pipeline would that create or cost?

RevOps Masterclass: From Dashboards to Decisions webinar (Jan 29, 2026)

TLDR:

Alex Biale from Domestique has watched companies waste months perfecting attribution models that nobody uses. His philosophy: "If you can make a decision on it, it's good enough."

Start with a codified customer journey and frozen definitions before building any attribution model

Establish a weekly/monthly/quarterly operating cadence to actually use attribution data for decisions

The only question that matters

Can you answer this: if marketing had an extra $100,000 next quarter, how much qualified pipeline could you create? Or the inverse: if your budget gets cut by $100K, what pipeline disappears?

That's the entire point of attribution. Not first-touch versus last-touch debates. Not getting credit exactly right. Attribution is a tool for making investment decisions. If your model can't answer that budget question, fix that first.
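As a rough illustration of the budget question, the sketch below projects pipeline from a spend change using each channel's historical pipeline per dollar. It assumes returns stay linear at the margin, which is a strong simplification (most channels show diminishing returns), and the channel names and figures are invented.

```python
# Hypothetical history: channel -> (spend, qualified pipeline) for last quarter.
HISTORY = {
    "paid_search": (200_000, 1_000_000),
    "events": (100_000, 300_000),
}


def pipeline_per_dollar(history: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Qualified pipeline created per dollar spent, by channel."""
    return {ch: pipeline / spend for ch, (spend, pipeline) in history.items()}


def estimate_pipeline_change(history: dict[str, tuple[float, float]],
                             spend_change: dict[str, float]) -> float:
    """Project the pipeline delta for a per-channel change in spend (in dollars).

    Assumes each channel keeps its historical pipeline-per-dollar ratio.
    """
    ratios = pipeline_per_dollar(history)
    return sum(ratios[ch] * delta for ch, delta in spend_change.items())


# An extra $100K, split 60/40 between the two channels.
print(estimate_pipeline_change(HISTORY, {"paid_search": 60_000, "events": 40_000}))
# 420000.0

# A $100K cut taken entirely from events.
print(estimate_pipeline_change(HISTORY, {"events": -100_000}))
# -300000.0
```

The estimate is only as good as the attribution behind the history table, which is the point: if the model can't populate that table credibly, it can't answer the budget question.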

Start with lifecycle definitions, not the model

The most common mistake is building attribution logic before aligning on what things mean. What counts as a lead? A marketing qualified lead? Qualified pipeline?

Create a "Rosetta Stone" – documented lifecycle stages that everyone agrees to. Then freeze those definitions. Don't change what counts as pipeline mid-quarter, no matter how tempting. It destroys your ability to compare performance and erodes trust in the model.

Choose your model based on your motion

First-touch attribution works best for early-stage companies focused on top-of-funnel awareness. Last-touch works for tracking conversion moments. Linear models help established companies see what shows up consistently quarter over quarter.

For B2B specifically, the webinar's recommendation is a position-based model (W-shaped and full-path are common variants) because you want to know what sourced the first anonymous visit, what created the record, what created the opportunity, and what influenced the close: multiple milestones, not just one.

The operating cadence makes it real

Building dashboards means nothing if nobody looks at them. The recommended rhythm:

Weekly: The person managing attribution reviews data coverage and anomalies. Are records missing UTM campaigns? That's a red flag.

Monthly: Use attribution insights to inform budget shifts. Which channels show increasing traction? Which bets from two months ago aren't working?

Quarterly: Audit the full model, freeze any taxonomy changes, and plan the next quarter's experiments.
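The weekly coverage check lends itself to a small script. This sketch assumes records arrive as dictionaries with the standard `utm_source`, `utm_medium`, and `utm_campaign` keys, and it defines the coverage score as the share of records with all three filled in; that definition is an assumption, since the webinar summary does not spell one out.

```python
REQUIRED_UTM_FIELDS = ("utm_source", "utm_medium", "utm_campaign")


def missing_fields(record: dict) -> list[str]:
    """List the required UTM fields that are absent or blank on a record."""
    return [f for f in REQUIRED_UTM_FIELDS if not (record.get(f) or "").strip()]


def coverage_score(records: list[dict]) -> float:
    """Share of records with every required UTM field populated."""
    if not records:
        return 0.0
    complete = sum(1 for r in records if not missing_fields(r))
    return complete / len(records)


# Hypothetical CRM export.
records = [
    {"id": 1, "utm_source": "google", "utm_medium": "cpc", "utm_campaign": "q1_brand"},
    {"id": 2, "utm_source": "linkedin", "utm_medium": "paid_social", "utm_campaign": "abm"},
    {"id": 3, "utm_source": "newsletter", "utm_medium": "email", "utm_campaign": ""},
    {"id": 4, "utm_source": "google", "utm_medium": "cpc", "utm_campaign": "q1_demo"},
]

print(f"Coverage: {coverage_score(records):.0%}")  # Coverage: 75%
for r in records:
    gaps = missing_fields(r)
    if gaps:
        print(f"Record {r['id']} is missing: {', '.join(gaps)}")
# Record 3 is missing: utm_campaign
```

Tracking this number week over week gives the attribution owner an early warning before gaps distort the monthly budget review.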

Where AI helps, and where it doesn't

AI can help with automated QA (catching missing UTM fields), anomaly detection, and scenario planning. But don't let AI make your crediting decisions in a black box without an audit trail. "It can hallucinate," Alex cautioned. "It can help check your measurement. Just don't let it quietly decide your crediting rules."

The bottom line

Your 30-60-90 action plan: partner with RevOps to freeze customer journey definitions. Fix your UTM hygiene and channel groupings (tedious but essential). Pick one model with a handful of metrics to measure. Publish a coverage score to hold yourself accountable. Then run the operating cadence weekly, monthly, and quarterly so attribution actually informs decisions instead of gathering dust.

Disclaimer

The RevOps Leader summarizes and comments on publicly available podcasts for educational and informational purposes only. It is not legal, financial, or investment advice; please consult qualified professionals before acting. We attribute brands and podcast titles only to identify the source; such nominative use is consistent with trademark fair-use principles. Limited quotations and references are used for commentary and news reporting under U.S. fair-use doctrine.